Voice assistants have become an important channel for businesses in 2025, and the tools used to build them must handle the particular challenges of American English: regional accents, slang, and US-specific formats (e.g., MM/DD/YYYY dates, dollar pricing). Here's a quick breakdown of the top tools for building voice assistants:

- Google Dialogflow: Advanced conversational AI with seamless integrations and US English optimization. Free tier available.

- Amazon Lex: Combines speech recognition and natural language understanding, ideal for AWS ecosystems. $0.004/request.

- OpenAI Whisper: Open-source speech-to-text system with high transcription accuracy for US English.

- Synthflow: No-code platform for creating voice assistants, tailored for multi-channel deployment.

- Lindy: Focused on automating enterprise workflows with voice assistants.

- Rasa: Open-source framework offering full control over conversational AI, suited for enterprise needs.

- Microsoft Azure Bot Service: Deep integration with Microsoft tools, scalable for large deployments.

- Mozilla DeepSpeech: Open-source speech recognition engine, though archived as of June 2025.

- Google Cloud Text-to-Speech: Converts text into lifelike speech, supporting multiple US English voices.

- All Top AI Tools: A curated directory to explore AI tools, including voice assistant platforms.

These platforms vary in complexity, customization, and cost, catering to different project needs - from quick prototypes to enterprise-level solutions.


Quick Comparison

| Tool | Focus Area | US English Optimization | Integration Options | Starting Price |
|---|---|---|---|---|
| Google Dialogflow | Conversational AI | Strong | Google Cloud, webhooks | Free tier, $0.002/request |
| Amazon Lex | Text & voice interactions | Strong | AWS services, APIs | $0.004/request |
| OpenAI Whisper | Speech-to-text | Strong | Python API, REST | Free (open source) |
| Synthflow | No-code voice assistants | Strong | REST APIs, CRM connectors | Contact for pricing |
| Lindy | Workflow automation | Strong | APIs, webhooks | Contact for pricing |
| Rasa | Enterprise conversational AI | Strong | Self-hosted, cloud deployment | Free (open source) |
| Microsoft Azure Bot Service | Multi-channel bots | Strong | Microsoft tools, APIs | $0.50/1,000 messages |
| Mozilla DeepSpeech | Speech recognition | Moderate | Open-source, TensorFlow | Free (archived) |
| Google Cloud Text-to-Speech | Text-to-speech synthesis | Strong | REST APIs, client libraries | $4.00/million characters |
| All Top AI Tools | Tool directory | N/A | N/A | $29/month |

Choose based on your project’s scale, technical expertise, and budget. For simple setups, no-code tools like Synthflow work well. For greater control, Rasa or Whisper may be better options.

1. All Top AI Tools

Compiled by John Rush, All Top AI Tools is a carefully organized directory that brings together some of the best AI tools across various categories, including Assistant, Audio, and Programming. It’s a fantastic resource for anyone diving into voice assistant projects, as it simplifies the process of researching and selecting the right tools for your needs.

Once you've explored this directory, consider checking out Google Dialogflow to tap into advanced conversational AI features.

2. Google Dialogflow

Google Dialogflow, powered by Google Cloud, is a conversational AI platform designed to help developers create voice assistants. It leverages natural language understanding to turn user requests into actionable data.

Natural Language Understanding (NLU) Capabilities

Dialogflow uses advanced intent recognition to figure out what users are asking for, even if they phrase their requests in different ways. For example, whether someone says, "I need to book a flight" or "Can I reserve a ticket", the platform identifies the same intent. It also pulls out critical details - like recognizing "New York" as a destination or "Friday" as a date - ensuring the conversation flows naturally across multiple exchanges. Additionally, it comes with built-in small talk capabilities, so the assistant can handle casual interactions without requiring developers to code every response.
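To make the intent-and-parameter flow concrete, here is a minimal sketch that sends one utterance to a Dialogflow ES (v2) agent over its REST `detectIntent` endpoint using only the standard library. It assumes you already have an agent with a flight-booking intent (hypothetical here), a Google Cloud project ID, and an OAuth access token (for example from `gcloud auth print-access-token`); the session ID is any string you choose per conversation.

```python
import json
import urllib.request

DIALOGFLOW_API = "https://dialogflow.googleapis.com/v2"


def build_detect_intent_request(project_id: str, session_id: str, text: str,
                                language_code: str = "en-US") -> tuple[str, dict]:
    """Return the URL and JSON body for a Dialogflow ES v2 detectIntent call."""
    url = (f"{DIALOGFLOW_API}/projects/{project_id}/agent/sessions/"
           f"{session_id}:detectIntent")
    body = {"queryInput": {"text": {"text": text, "languageCode": language_code}}}
    return url, body


def detect_intent(project_id: str, session_id: str, text: str,
                  access_token: str) -> dict:
    """Send one user utterance and return the matched intent and extracted parameters."""
    url, body = build_detect_intent_request(project_id, session_id, text)
    request = urllib.request.Request(
        url,
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {access_token}",
            "Content-Type": "application/json; charset=utf-8",
        },
        method="POST",
    )
    with urllib.request.urlopen(request) as response:
        result = json.load(response)["queryResult"]
    return {
        "intent": result.get("intent", {}).get("displayName"),
        # Parameter names depend on the entities defined in your agent.
        "parameters": result.get("parameters", {}),
    }
```

If the agent is trained on both phrasings, "I need to book a flight" and "Can I reserve a ticket" should come back with the same intent name. Reusing the same `session_id` across turns is what lets Dialogflow carry context from one exchange to the next.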

Integration Options

Dialogflow integrates seamlessly with a variety of platforms, including messaging apps, phone systems, and web applications. It offers built-in connections to popular services and a robust API for creating custom integrations. For voice-based solutions, its telephony integrations allow direct setup with phone systems, making it a great choice for customer service hotlines or automated support. The platform also supports webhooks, enabling real-time connections to external databases, CRM tools, or other business systems.
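A webhook is just an HTTPS endpoint that receives the matched intent and parameters and returns a reply. The sketch below is a minimal standard-library version, assuming the Dialogflow ES webhook format (`queryResult` in the request, `fulfillmentText` in the response); the `BookFlight` intent and `geo-city` parameter are hypothetical examples, and the spot where you would query a database or CRM is marked in a comment.

```python
import json
from http.server import BaseHTTPRequestHandler, HTTPServer


def handle_webhook(request: dict) -> dict:
    """Build a fulfillment response from a Dialogflow ES webhook request."""
    query_result = request.get("queryResult", {})
    intent = query_result.get("intent", {}).get("displayName", "")
    params = query_result.get("parameters", {})

    if intent == "BookFlight":  # hypothetical intent name
        city = params.get("geo-city") or "your destination"
        # A real system would look up flights in a database or CRM here.
        return {"fulfillmentText": f"Looking up flights to {city} now."}
    return {"fulfillmentText": "Sorry, I can't help with that yet."}


class WebhookHandler(BaseHTTPRequestHandler):
    def do_POST(self):
        length = int(self.headers.get("Content-Length", 0))
        request = json.loads(self.rfile.read(length) or b"{}")
        payload = json.dumps(handle_webhook(request)).encode("utf-8")
        self.send_response(200)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(payload)))
        self.end_headers()
        self.wfile.write(payload)


def serve(port: int = 8080) -> None:
    """Run the webhook locally; Dialogflow requires HTTPS, so put a TLS proxy in front."""
    HTTPServer(("0.0.0.0", port), WebhookHandler).serve_forever()
```

Keeping the business logic in a plain `handle_webhook` function makes it easy to unit-test without a running server.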

Customization and Scalability

Developers can fine-tune Dialogflow by adding custom training phrases and entities tailored to their specific needs. Since it’s cloud-based, the platform handles scaling automatically - whether you're building a small prototype or managing enterprise-level traffic. Features like version control and environment management make it easy to test and roll out updates without disrupting live interactions. This flexibility makes Dialogflow a solid choice for creating voice assistants tailored to US-based audiences.

Support for US English

Dialogflow is optimized for US English, with models designed to understand American accents and common slang. This ensures user interactions feel smooth and natural for people in the United States.

3. Amazon Lex

Amazon Lex, powered by the same deep learning technology behind Alexa, supports both text and voice interactions. It combines automatic speech recognition (ASR) with natural language understanding (NLU), so a single service can turn spoken input into the intents and details your voice assistant acts on.

Natural Language Understanding (NLU) Capabilities

Lex processes user input to identify intent, learns variations in phrasing from limited examples, and handles multi-turn conversations by keeping track of context. On June 12, 2025, Amazon introduced an upgraded LLM-Assisted NLU, designed to better manage complex or error-prone inputs. This enhancement enables Lex to interpret lengthy or intricate requests, handle minor errors, and extract key details from verbose inputs - all while requiring minimal training data.

Integration Options

Lex integrates effortlessly with AWS services and connects easily to phone systems, web apps, and messaging platforms using APIs and webhooks. A great example is TransUnion, which saw impressive results after implementing Amazon Lex: wait times dropped from 2 minutes to just 18 seconds, transfer rates were cut in half, and annual contact center costs were reduced by 40%.

Customization and Scalability

Lex is designed to scale automatically, whether you're working on a prototype or deploying at an enterprise level. Developers can train it using industry-specific terms and set it up to prompt users for missing details. For instance, ROYBI Inc. leveraged Lex to enhance its conversational interface in early-childhood education robots, delivering personalized learning experiences through natural voice interactions.
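Prompting for missing details is usually done in a Lambda code hook. This is a minimal sketch assuming the Lex V2 Lambda event and response shapes (`sessionState.intent.slots` in, a `dialogAction` of `ElicitSlot` or `Close` out); the `BookFlight` intent and the `Destination` and `TravelDate` slots are hypothetical.

```python
# Hypothetical required slots, in the order the bot should ask for them.
REQUIRED_SLOTS = {
    "Destination": "Where would you like to fly?",
    "TravelDate": "What date would you like to travel?",
}


def elicit_slot(intent: dict, slot_name: str, message: str) -> dict:
    """Ask the user for one missing slot."""
    return {
        "sessionState": {
            "dialogAction": {"type": "ElicitSlot", "slotToElicit": slot_name},
            "intent": intent,
        },
        "messages": [{"contentType": "PlainText", "content": message}],
    }


def close(intent: dict, message: str) -> dict:
    """End the conversation and mark the intent as fulfilled."""
    return {
        "sessionState": {
            "dialogAction": {"type": "Close"},
            "intent": {**intent, "state": "Fulfilled"},
        },
        "messages": [{"contentType": "PlainText", "content": message}],
    }


def lambda_handler(event, context):
    intent = event["sessionState"]["intent"]
    slots = intent.get("slots") or {}
    # Unfilled slots arrive as None, so prompt for the first one that is missing.
    for slot_name, prompt in REQUIRED_SLOTS.items():
        if not slots.get(slot_name):
            return elicit_slot(intent, slot_name, prompt)
    destination = slots["Destination"]["value"]["interpretedValue"]
    travel_date = slots["TravelDate"]["value"]["interpretedValue"]
    return close(intent, f"Booking your flight to {destination} on {travel_date}.")
```

Lex reports built-in date slots as ISO dates (YYYY-MM-DD), so a US-facing bot would typically reformat them before reading them back.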

Localization and Support for US English

Amazon Lex is optimized for US English, effectively handling American accents and colloquial expressions, and the LLM-Assisted NLU feature supports multiple locales, including US English (en_US). To ensure the best results, Amazon advises using clear and descriptive intent names (e.g., "BookFlight") and providing detailed descriptions for each custom intent and slot. Note that support for Lex V1 officially ends on September 15, 2025, so all new projects should use Lex V2.
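For a Lex V2 bot, a text turn can be sent from your own code with boto3's `lexv2-runtime` client and its `recognize_text` call, specifying the `en_US` locale. This is a minimal sketch: the bot ID, alias ID, and session ID are placeholders you supply, AWS credentials are assumed to be configured, and boto3 is imported inside the function so the response-parsing helper can be tested without AWS dependencies.

```python
def summarize_lex_response(response: dict) -> dict:
    """Extract the intent name, interpreted slot values, and bot messages."""
    intent = response.get("sessionState", {}).get("intent", {})
    slots = {
        # Unfilled slots are None; filled ones carry value.interpretedValue.
        name: (slot or {}).get("value", {}).get("interpretedValue")
        for name, slot in (intent.get("slots") or {}).items()
    }
    messages = [m.get("content", "") for m in response.get("messages", [])]
    return {"intent": intent.get("name"), "slots": slots, "messages": messages}


def ask_lex(text: str, bot_id: str, bot_alias_id: str, session_id: str) -> dict:
    """Send one text turn to a Lex V2 bot using the US English locale."""
    import boto3  # pip install boto3; uses your configured AWS credentials

    client = boto3.client("lexv2-runtime")
    response = client.recognize_text(
        botId=bot_id,
        botAliasId=bot_alias_id,
        localeId="en_US",
        sessionId=session_id,
        text=text,
    )
    return summarize_lex_response(response)
```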

"Amazon Lex provides the deep functionality and flexibility of natural language understanding (NLU) and automatic speech recognition (ASR) so you can build highly engaging user experiences with lifelike, conversational interactions, and create new categories of products." - Amazon Web Services

Next, let’s dive into OpenAI Whisper for the latest in speech-to-text technology.

4. OpenAI Whisper

OpenAI Whisper is an advanced ASR (Automatic Speech Recognition) system designed to transform audio into text with remarkable accuracy. It serves as a strong base for building voice-assisted applications by delivering dependable transcription capabilities.

Integration Options

Developers have two main ways to work with Whisper. For complete control, the open-source model can be downloaded and run locally as a Python package, which keeps all audio and processing on your own infrastructure. Alternatively, OpenAI offers Whisper through its API, so you can use its transcription capabilities without managing infrastructure. The API can be called from OpenAI's official SDKs, such as those for Python and JavaScript/Node.js, or from any language that can make HTTP requests, making it adaptable for a wide range of applications.

Customization and Scalability

Whisper is highly versatile, accepting common audio formats (decoded through ffmpeg when run locally) and handling everything from short voice commands to lengthy recordings. The model comes in several sizes, from a compact "tiny" version for resource-limited environments through base, small, and medium up to a "large" model that prioritizes accuracy, with English-only ".en" variants of the smaller sizes. This range lets developers choose the right balance between accuracy and computational cost.
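The sketch below shows one way to pick a model size and transcribe either locally or through the API. The VRAM figures are the approximate values listed in the openai-whisper README for GPU use (CPU inference also works, just more slowly), and the selection rule "largest model that fits" is an illustrative assumption rather than an official recommendation. Library imports live inside the functions so the selection logic runs without Whisper installed.

```python
# (model name, approximate GPU memory in GB), smallest first.
WHISPER_MODELS = [("tiny", 1), ("base", 1), ("small", 2), ("medium", 5), ("large", 10)]
# English-only ".en" checkpoints exist for these sizes, not for "large".
HAS_ENGLISH_ONLY = {"tiny", "base", "small", "medium"}


def pick_whisper_model(vram_gb: float, english_only: bool = True) -> str:
    """Return the largest model that fits the memory budget."""
    fitting = [name for name, need in WHISPER_MODELS if need <= vram_gb]
    if not fitting:
        raise ValueError("Even the tiny model needs roughly 1 GB of memory")
    name = fitting[-1]
    if english_only and name in HAS_ENGLISH_ONLY:
        name += ".en"
    return name


def transcribe_local(path: str, vram_gb: float) -> str:
    import whisper  # pip install openai-whisper; needs ffmpeg on PATH

    model = whisper.load_model(pick_whisper_model(vram_gb))
    return model.transcribe(path)["text"]


def transcribe_api(path: str) -> str:
    from openai import OpenAI  # pip install openai; reads OPENAI_API_KEY

    client = OpenAI()
    with open(path, "rb") as audio:
        return client.audio.transcriptions.create(model="whisper-1", file=audio).text
```

For a US-only product, the ".en" checkpoints are a reasonable default, since they tend to do better on English than the multilingual models of the same size.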

Localization and Support for US English

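Whisper is a multilingual model rather than one tuned specifically for US English, but its English-only checkpoints perform well on American speech. It writes numbers, dates, and measurements the way they tend to appear in its training data, so applications that require strict US formats (such as MM/DD/YYYY dates or dollar amounts) should normalize the transcript in a post-processing step.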
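As an example of that post-processing step, this hypothetical helper rewrites spelled-out dates such as "March 7th, 2025" into MM/DD/YYYY. It assumes the transcript contains month names followed by a day and a four-digit year; it does not validate day ranges or handle other date styles.

```python
import re

MONTHS = {name: number for number, name in enumerate(
    ["january", "february", "march", "april", "may", "june", "july",
     "august", "september", "october", "november", "december"], start=1)}

# Matches "March 7th, 2025" or "july 4 2025" (ordinal suffix and comma optional).
DATE_PATTERN = re.compile(
    r"\b(" + "|".join(MONTHS) + r")\s+(\d{1,2})(?:st|nd|rd|th)?,?\s+(\d{4})\b",
    re.IGNORECASE,
)


def to_us_dates(transcript: str) -> str:
    """Rewrite spelled-out dates in a transcript as MM/DD/YYYY."""
    def replace(match: re.Match) -> str:
        month = MONTHS[match.group(1).lower()]
        return f"{month:02d}/{int(match.group(2)):02d}/{match.group(3)}"

    return DATE_PATTERN.sub(replace, transcript)
```

For example, `to_us_dates("Meeting on March 7th, 2025.")` returns `"Meeting on 03/07/2025."`.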

Next, explore how Synthflow approaches conversational AI development.

5. Synthflow

Synthflow is a no-code conversational AI platform designed to help developers and businesses create voice assistants without writing a single line of code. It’s built to manage dialogue flows across various channels, making it a great fit for customer service and business automation tasks.

Natural Language Understanding (NLU) Capabilities

Synthflow’s NLU engine is built to process conversational context with precision. It identifies key elements like intent, entities, and sentiment, allowing voice assistants to differentiate between a straightforward request for product details and a user expressing frustration. Additionally, its conversation memory feature remembers past interactions, so users don’t have to repeat themselves.

Integration Options

As a cloud-based service, Synthflow provides a variety of integration options. It supports REST APIs, webhooks, and CRM connectors, making it easy to connect voice assistants with existing business systems. It also integrates seamlessly with telephony systems, web chat widgets, and SMS, enabling deployment across multiple communication channels.

Customization and Scalability

You can design dialogue flows with its intuitive drag-and-drop interface or add custom logic using JavaScript and APIs. Synthflow is built to scale, using load balancing and adaptive routing to handle operations of any size. Its pricing structure is flexible, accommodating businesses large and small.

Localization and Support for US English

Synthflow’s voice recognition and text-to-speech tools are specifically optimized for American English. They handle local accents and pronunciation effortlessly, while formatting numbers, dates, and currency according to US standards. The platform also incorporates terminology and references familiar to American businesses, ensuring a seamless user experience.

Next, we’ll dive into how Lindy is pushing the boundaries of voice assistant technology.


6. Lindy

Lindy is an AI-driven platform designed to streamline enterprise workflows through automation. It specializes in creating voice assistants that handle tasks like managing calendars, processing emails, and coordinating team communications.

Natural Language Understanding (NLU) Capabilities

Lindy's NLU technology enables it to interpret user commands, maintain the flow of conversations, and distinguish between various tasks. This makes it particularly effective for scheduling and project management.

Integration Options

The platform supports a range of integration methods, including cloud-native API connectivity, webhooks, and REST APIs. These options allow Lindy to seamlessly connect with business tools, collaboration platforms, and telephony systems, making it easy to set up tailored and scalable workflows.

Customization and Scalability

Lindy includes a visual automation builder that simplifies the creation of voice-activated workflows. Users can also enhance its vocabulary to recognize industry-specific terms and company-specific language. Whether for a single user or an entire organization, Lindy is built to scale. Its adaptive learning capabilities further improve performance over time.

Localization and Support for US English

Lindy is fine-tuned for American English, using the MM/DD/YYYY date format, U.S. currency symbols, and terminology familiar to U.S. businesses. This localization ensures a smooth experience for users in the United States.

7. Rasa

Rasa is an open-core framework designed for enterprise-level conversational AI. It’s built to handle the demands of large-scale deployments, making it a strong fit for US businesses that prioritize speed, scalability, and control. With Rasa Pro, the platform incorporates generative AI to enable more nuanced conversations, all while meeting the strict security and monitoring standards enterprises expect.

Natural Language Understanding (NLU) Capabilities

Rasa’s CALM (Conversational AI with Language Models) framework focuses on Dialogue Understanding (DU), which allows it to interpret intent within the broader context of a conversation. This means it can manage interruptions and multi-turn discussions seamlessly.

By maintaining context throughout complex interactions, Rasa enables natural, back-and-forth exchanges. This contextual awareness ensures that voice assistants can handle topic shifts or revisit earlier points in a conversation, making interactions feel smoother and more human-like.

Integration Options

Rasa offers flexible deployment methods, including on-premise setups for industries with strict data security needs. It integrates easily with enterprise systems like CRMs, ERPs, and knowledge bases through robust APIs, ensuring smooth collaboration with existing tools.
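One common integration path is Rasa's REST channel, which accepts a JSON message and returns the assistant's replies. This minimal sketch assumes the `rest:` channel is enabled in `credentials.yml` and the Rasa server is running on its default port 5005; a telephony gateway or CRM could call `send_to_rasa` with an utterance that a speech-to-text engine has already transcribed.

```python
import json
import urllib.request

# Default REST channel endpoint; adjust host and port for your deployment.
RASA_REST_URL = "http://localhost:5005/webhooks/rest/webhook"


def build_rasa_payload(sender_id: str, message: str) -> bytes:
    """The sender ID lets Rasa keep a separate conversation tracker per caller."""
    return json.dumps({"sender": sender_id, "message": message}).encode("utf-8")


def send_to_rasa(sender_id: str, message: str, url: str = RASA_REST_URL) -> list[str]:
    request = urllib.request.Request(
        url,
        data=build_rasa_payload(sender_id, message),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request) as response:
        replies = json.load(response)
    # Each reply is a dict such as {"recipient_id": ..., "text": ...};
    # replies carrying only images or buttons have no "text" key.
    return [reply["text"] for reply in replies if "text" in reply]
```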

The platform also supports multi-channel content management, allowing businesses to deliver consistent user experiences across voice, chat, and other communication channels. Developers can create voice assistants that complement existing customer service operations without disrupting workflows.

Customization and Scalability

Rasa Studio provides a no-code interface that simplifies the process of building, testing, and refining generative conversational AI assistants. This visual tool promotes collaboration across teams and makes designing voice-first interaction paths more accessible, even for team members without extensive coding knowledge.

Rasa’s architecture is highly customizable, enabling developers to scale their assistants from basic automation to advanced, context-aware systems. Teams can choose which Large Language Models (LLMs) to integrate, giving them full control over the AI’s capabilities.

The platform is built to handle growth, from small-scale applications to organization-wide deployments. With tools for continuous improvement, developers can refine their assistants using real-world interaction data, ensuring better performance over time.

Localization and Support for US English

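Rasa itself is language-agnostic, so how well an assistant handles US English depends on its training data, pipeline components, and responses. Teams can train on American speech patterns and colloquialisms, extract dates and dollar amounts with a component such as DucklingEntityExtractor set to the en_US locale, and format responses using US conventions (MM/DD/YYYY dates, dollar-based currency). This makes it possible to build assistants whose responses are contextually appropriate for American users across industries and regions.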
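Rasa's DucklingEntityExtractor delegates date, time, and money parsing to a separate open-source Duckling server. To see what that server returns for US-style input, you can query it directly. This sketch assumes Duckling is running locally on port 8000 (for example via the `rasa/duckling` Docker image); the America/New_York time zone is an arbitrary example.

```python
import json
import urllib.parse
import urllib.request

DUCKLING_URL = "http://localhost:8000/parse"  # assumes a local Duckling server


def build_duckling_form(text: str, tz: str = "America/New_York") -> bytes:
    """Form-encoded request asking for US English times and money amounts."""
    form = {
        "text": text,
        "locale": "en_US",  # makes "7/4" read as July 4, not April 7
        "tz": tz,
        "dims": json.dumps(["time", "amount-of-money"]),
    }
    return urllib.parse.urlencode(form).encode("ascii")


def parse_with_duckling(text: str) -> list[dict]:
    # urllib sends form data as application/x-www-form-urlencoded by default.
    request = urllib.request.Request(DUCKLING_URL, data=build_duckling_form(text),
                                     method="POST")
    with urllib.request.urlopen(request) as response:
        return json.load(response)
```

Duckling returns structured values (ISO timestamps, numeric amounts with a unit), so the MM/DD/YYYY and dollar formatting still happens in your response templates or custom actions.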

Next, we’ll take a look at how Microsoft Azure Bot Service builds on these features.

8. Microsoft Azure Bot Service

Microsoft Azure Bot Service is a cloud-based platform designed to create intelligent voice assistants that seamlessly integrate with Microsoft's suite of tools. Built on Azure's robust cloud infrastructure and AI capabilities, it's a natural fit for businesses already using Microsoft's technologies.

Natural Language Understanding (NLU) Capabilities

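Azure Bot Service draws on Azure AI services (formerly Azure Cognitive Services) for language understanding. Historically this meant LUIS, which Microsoft is retiring on October 1, 2025, in favor of Conversational Language Understanding (CLU) in Azure AI Language. Together these services enable multi-turn conversations that maintain context. Azure's Speech Services handle real-time speech-to-text conversion, support various audio formats, and include noise reduction features to manage diverse accents and speaking styles.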
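To try US English speech recognition without installing the Speech SDK, this sketch swaps in Azure's REST API for short audio (intended for clips of up to about 60 seconds). It assumes a Speech resource key and region, and a 16 kHz, 16-bit mono PCM WAV file. With `format=detailed`, each result in `NBest` includes a `Display` field, which is the punctuated, inverse-text-normalized form (for example, digits and dollar signs instead of spelled-out words).

```python
import json
import urllib.parse
import urllib.request
from typing import Optional


def build_stt_url(region: str, language: str = "en-US") -> str:
    query = urllib.parse.urlencode({"language": language, "format": "detailed"})
    return (f"https://{region}.stt.speech.microsoft.com/speech/recognition/"
            f"conversation/cognitiveservices/v1?{query}")


def best_display_text(result: dict) -> Optional[str]:
    """Return the top hypothesis's display text, or None if nothing was recognized."""
    if result.get("RecognitionStatus") != "Success" or not result.get("NBest"):
        return None
    return result["NBest"][0]["Display"]


def recognize_wav(path: str, region: str, key: str) -> Optional[str]:
    with open(path, "rb") as f:
        audio = f.read()
    request = urllib.request.Request(
        build_stt_url(region),
        data=audio,
        headers={
            "Ocp-Apim-Subscription-Key": key,
            "Content-Type": "audio/wav; codecs=audio/pcm; samplerate=16000",
            "Accept": "application/json",
        },
        method="POST",
    )
    with urllib.request.urlopen(request) as response:
        return best_display_text(json.load(response))
```

For continuous, real-time recognition inside a bot, the Speech SDK is the better fit; the REST call is mainly useful for quick tests and short commands.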

Integration Options

One of the standout features of Azure Bot Service is its deep integration with tools like Office 365, Teams, Dynamics 365, and Power Platform. This allows developers to create voice assistants capable of accessing calendar information, retrieving customer records, or automating workflows with minimal effort. Additionally, the platform supports deployment across multiple channels, including:

- Voice-enabled devices

- Web chat

- Mobile apps

- Smart speakers

Developers can build once and deploy across these channels effortlessly. For added flexibility, the platform provides REST APIs and SDKs for .NET, JavaScript, Python, and Java. Pre-built connectors make it easier to integrate with popular business applications, further simplifying the development process.

Customization and Scalability

With Bot Framework Composer, even non-technical users can design conversation flows using a drag-and-drop interface. Azure Bot Service is built to scale automatically, whether you're creating small internal tools or customer-facing assistants serving millions of users. Its pay-as-you-go pricing ensures you only pay for what you use. Additionally, custom neural voice features allow businesses to develop unique voice personas that align with their brand identity.

Localization and Support for US English

Azure Bot Service offers robust localization features tailored to US-specific needs. Its language models are trained on American English, ensuring accurate formatting for dates (MM/DD/YYYY), currency ($1,234.56), and measurements (feet, inches, Fahrenheit). It also adapts to regional pronunciations, idiomatic expressions, and time zones, delivering responses that feel locally relevant and natural.
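When a bot composes reply text itself (for example, in a custom dialog that reads values from a database), the US conventions above still have to be applied in code. These are hypothetical helper functions, not part of the Bot Framework SDK; `Decimal` is used for money to avoid floating-point rounding surprises.

```python
from datetime import date
from decimal import Decimal


def format_us_date(d: date) -> str:
    """MM/DD/YYYY with zero padding, e.g. 07/04/2025."""
    return d.strftime("%m/%d/%Y")


def format_usd(amount: Decimal) -> str:
    """Dollar sign, thousands separators, two decimals; negatives as -$5.00."""
    sign = "-" if amount < 0 else ""
    return f"{sign}${abs(amount):,.2f}"


def celsius_to_fahrenheit(celsius: float) -> float:
    return celsius * 9 / 5 + 32
```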

Up next, take a look at Mozilla DeepSpeech’s open-source approach to voice recognition.

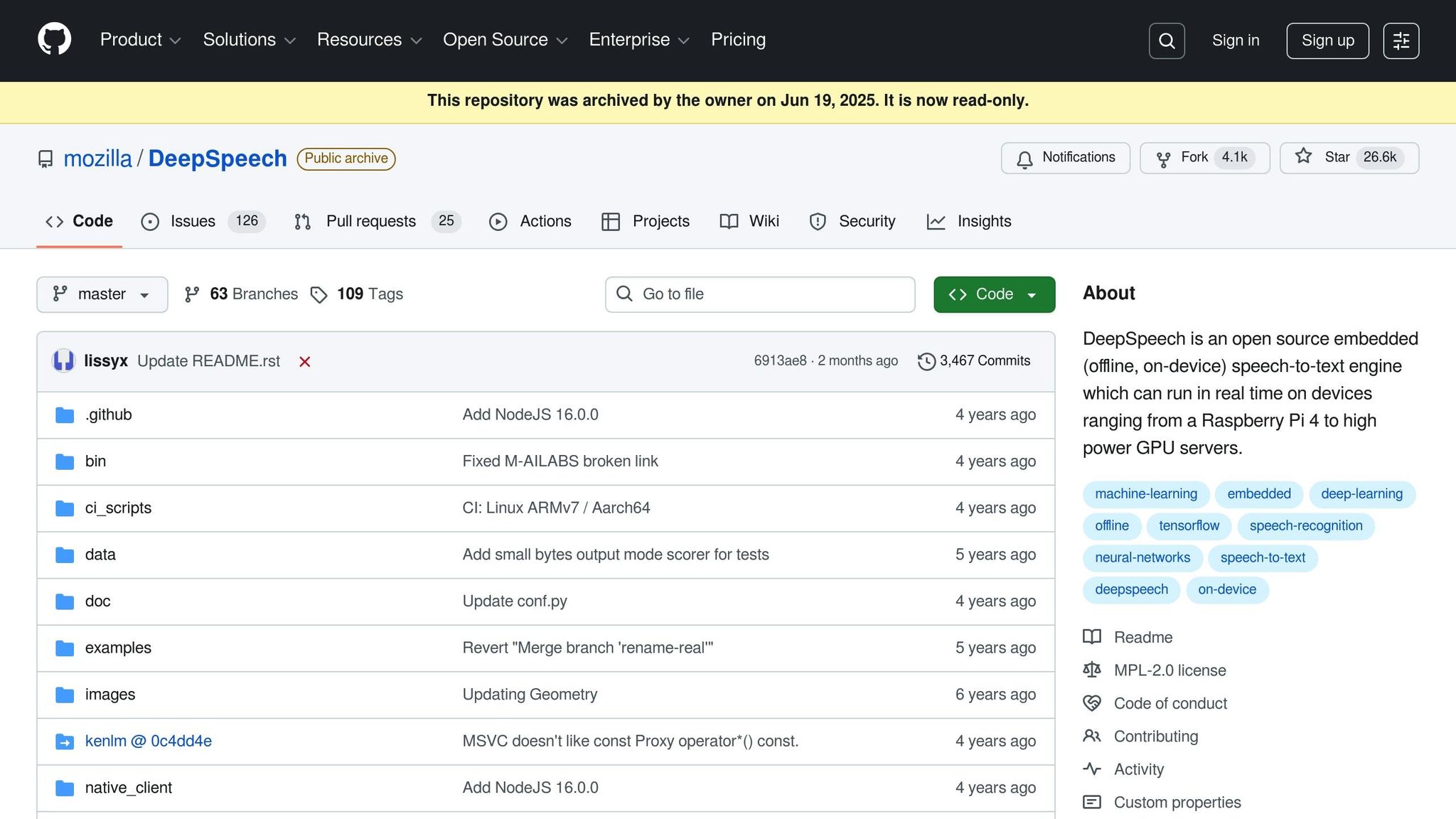

9. Mozilla DeepSpeech

Mozilla DeepSpeech is an open-source speech-to-text engine designed to convert spoken words into text. It's a key Automatic Speech Recognition (ASR) tool for building custom voice-enabled applications.

Important note: As of June 19, 2025, Mozilla has archived the DeepSpeech project, making it read-only. However, developers can still access and work with the existing codebase.

Integration Options

DeepSpeech acts as the ASR component, transforming audio input into text, which can then be processed further using natural language processing tools.

Customization

Because it is open source under the Mozilla Public License 2.0, DeepSpeech lets developers modify and adapt the code, provided they follow that license's terms. An optional external scorer (a KenLM-based language model distributed as a .scorer file) improves transcription accuracy by predicting likely word sequences. DeepSpeech can also be deployed on private servers, which helps teams that prioritize data privacy.
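A minimal sketch of local transcription with the DeepSpeech Python package (0.9.x API: `Model`, `enableExternalScorer`, `sampleRate`, `stt`). The model and scorer paths are placeholders for the released acoustic model (.pbmm) and scorer files; because the project is archived, the last PyPI release may not install on recent Python versions. The format check uses only the standard library, and the heavy imports are deferred so it can run on its own.

```python
import io
import wave
from typing import Optional


def is_deepspeech_ready(wav_bytes: bytes, expected_rate: int = 16000) -> bool:
    """The released English models expect mono, 16-bit PCM at 16 kHz."""
    with wave.open(io.BytesIO(wav_bytes), "rb") as w:
        return (w.getframerate() == expected_rate
                and w.getnchannels() == 1
                and w.getsampwidth() == 2)


def transcribe(wav_path: str, model_path: str, scorer_path: Optional[str] = None) -> str:
    import numpy as np
    import deepspeech  # pip install deepspeech (archived project)

    model = deepspeech.Model(model_path)
    if scorer_path:
        model.enableExternalScorer(scorer_path)  # optional KenLM language model
    with open(wav_path, "rb") as f:
        data = f.read()
    if not is_deepspeech_ready(data, model.sampleRate()):
        raise ValueError("Convert audio to 16-bit mono PCM at the model's sample rate")
    with wave.open(io.BytesIO(data), "rb") as w:
        audio = np.frombuffer(w.readframes(w.getnframes()), dtype=np.int16)
    return model.stt(audio)
```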

Up next, take a look at Google Cloud Text-to-Speech and its advanced voice synthesis features.

10. Google Cloud Text-to-Speech

Google Cloud Text-to-Speech wraps up the voice assistant experience by transforming AI-generated text into speech that sounds remarkably human. Using advanced neural network technology, it generates natural audio from text input, making interactions feel more authentic.

The platform supports an impressive range of over 220 voices across 40+ languages and variants. For US English alone, there are multiple options, each offering unique speaking styles and tones. Developers can choose between standard voices or premium WaveNet voices, which provide highly realistic speech output.

Integration Options

Google Cloud Text-to-Speech is designed for easy integration. It connects via REST APIs and client libraries compatible with popular programming languages like Python, Java, Node.js, and C#. This cloud-based service can be incorporated into web applications, mobile apps, and IoT devices.

The platform also takes care of audio format conversions, supporting MP3, WAV, and OGG. When paired with other Google Cloud tools - such as Dialogflow for natural language understanding or Google Cloud Speech-to-Text for processing spoken input - it creates a seamless voice assistant pipeline.

Customization and Scalability

Developers have extensive control over how the generated speech sounds. Voice tuning parameters allow adjustments to speaking rate, pitch, and volume. The service also supports Speech Synthesis Markup Language (SSML), enabling precise control over pronunciation, pauses, emphasis, and even audio effects.
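The sketch below combines SSML with the voice-tuning parameters using the `google-cloud-texttospeech` client library. It assumes Application Default Credentials are configured; `en-US-Wavenet-D` is just one example US English voice, and the pause length and output path are arbitrary. Text is XML-escaped before it goes into SSML, so characters like `&` in user data don't break the markup.

```python
from xml.sax.saxutils import escape


def build_ssml(sentences: list[str], pause_ms: int = 300, rate: str = "medium") -> str:
    """Join sentences with short pauses and wrap them in a prosody element."""
    pause = f'<break time="{pause_ms}ms"/>'
    body = pause.join(escape(s) for s in sentences)
    return f'<speak><prosody rate="{rate}">{body}</prosody></speak>'


def synthesize(ssml: str, out_path: str = "reply.mp3") -> None:
    from google.cloud import texttospeech  # pip install google-cloud-texttospeech

    client = texttospeech.TextToSpeechClient()
    response = client.synthesize_speech(
        input=texttospeech.SynthesisInput(ssml=ssml),
        voice=texttospeech.VoiceSelectionParams(
            language_code="en-US", name="en-US-Wavenet-D"),
        audio_config=texttospeech.AudioConfig(
            audio_encoding=texttospeech.AudioEncoding.MP3,
            speaking_rate=1.0,  # allowed range 0.25 to 4.0
            pitch=0.0,          # semitones, -20.0 to 20.0
        ),
    )
    with open(out_path, "wb") as f:
        f.write(response.audio_content)
```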

Scalability is another strength. The platform can handle anything from a single request to millions per day, automatically adjusting to meet demand. Pricing is flexible, starting at $4.00 per million characters for standard voices and $16.00 per million characters for WaveNet voices, making it accessible for projects of all sizes - from small-scale apps to enterprise-level solutions.
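Because billing is per character, estimating cost is simple arithmetic. This small helper uses the two list prices quoted above and deliberately ignores any free tier, so treat the result as an upper-bound estimate and check current pricing before budgeting.

```python
# USD per million characters, as quoted above.
PRICE_PER_MILLION_CHARS = {"standard": 4.00, "wavenet": 16.00}


def estimate_tts_cost(characters: int, voice_type: str = "standard") -> float:
    """Estimated cost in USD for a given volume of synthesized characters."""
    return characters / 1_000_000 * PRICE_PER_MILLION_CHARS[voice_type]
```

For example, 10,000 replies averaging 250 characters is 2.5 million characters, or about $10 with standard voices and $40 with WaveNet voices.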

Localization and Support for US English

Google Cloud Text-to-Speech is tailored for US audiences, offering highly accurate and culturally appropriate speech output. It includes multiple US English voice options with variations in gender, age, and tone, catering to diverse use cases like virtual assistants or formal announcements.

The platform produces natural-sounding American accents and handles US-specific terms, measurements, and cultural references. With an en-US voice, dates, numbers, and currency in the input text are spoken according to American conventions.

Advanced features include text normalization, which converts symbols, abbreviations, and numbers into clear, spoken phrases. For instance, "$1,234.56" is vocalized as "one thousand two hundred thirty-four dollars and fifty-six cents", following proper American pronunciation. This attention to detail ensures a polished and professional user experience.

Feature Comparison Table

Here’s a quick-reference table summarizing the strengths of various tools. It highlights their features, pricing, and capabilities, making it easier to compare them side by side. Use this overview alongside the detailed tool profiles above to find the best fit for your project.

| Tool | NLU Capabilities | US English Support | Integration Options | Customization Level | Starting Price |
|---|---|---|---|---|---|
| All Top AI Tools | N/A (tool directory) | N/A | N/A | N/A | $29/month |
| Google Dialogflow | Built-in intent and entity recognition | Excellent with regional variants | Google Cloud, Firebase, webhooks | Medium – pre-built agents | Free tier, then $0.002/request |
| Amazon Lex | Built-in intent and slot recognition, LLM-Assisted NLU | Strong US English processing | AWS services, Lambda functions | High – custom slot types | $0.004/request |
| OpenAI Whisper | Speech-to-text only | Robust US accent recognition | Python package, REST API | Medium – model fine-tuning | Free (open source) |
| Rasa | Custom NLU pipeline | Configurable language models | Self-hosted, cloud deployment | Very High – full code control | Free (open source) |
| Microsoft Azure Bot Service | LUIS (retiring) or Conversational Language Understanding | Microsoft Speech Services | Teams, Slack, web channels | High – custom dialogs | $0.50/1,000 messages |
| Mozilla DeepSpeech | Speech-to-text only | US English model available | TensorFlow, mobile SDKs | High – model training | Free (archived) |
| Google Cloud Text-to-Speech | Text-to-speech only | Multiple US voice options | REST APIs, client libraries | Medium – SSML support | $4.00/million characters |

Key Feature Breakdown

Here’s a closer look at the main features and how they differ:

- NLU and Customization: Tools like Rasa provide full control with custom NLU pipelines, while Google Dialogflow and Amazon Lex offer pre-trained models that work well out of the box. OpenAI Whisper and Mozilla DeepSpeech focus on specific tasks like speech recognition but allow for fine-tuning.

- US English Support: Options range from native optimization to configurable language models, ensuring tools can adapt to regional nuances.

- Integration Options: Some platforms, like Google Dialogflow, offer plug-and-play integrations, while others, such as Rasa and Mozilla DeepSpeech, require more technical expertise but allow for deeper customization.

- Pricing Models: Open-source tools like Rasa, OpenAI Whisper, and Mozilla DeepSpeech are free but may involve infrastructure costs. Pay-as-you-go platforms like Google Dialogflow and Amazon Lex are affordable for smaller projects, scaling with usage.

- Development Speed: No-code platforms enable quick prototyping, while custom solutions like Rasa or Mozilla DeepSpeech may require more time but deliver tailored functionality for complex projects.
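To compare the pay-as-you-go options on pricing, it helps to plug your expected monthly volume into the per-unit prices. This sketch uses the figures quoted in this article and assumes one billable unit per conversational turn; in practice Azure bills per message (a single voice turn can involve more than one), free tiers are ignored, and speech and TTS charges are not included.

```python
# Per-unit prices quoted in this article (USD); verify against current vendor pricing.
PRICE_PER_UNIT = {
    "Google Dialogflow": 0.002,                  # per request
    "Amazon Lex": 0.004,                         # per request
    "Microsoft Azure Bot Service": 0.50 / 1000,  # per message
}


def monthly_costs(volume: int) -> dict[str, float]:
    """Estimated monthly cost for each platform at the given volume."""
    return {tool: round(volume * unit, 2) for tool, unit in PRICE_PER_UNIT.items()}
```

At 100,000 turns per month, this gives roughly $200 for Dialogflow, $400 for Lex, and $50 for Azure Bot Service, before any of the caveats above.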

For specialized needs, high customization is key. For example, no-code platforms like Synthflow offer a balance of ease and flexibility through visual interfaces, while enterprise solutions like Microsoft Azure Bot Service allow for deep integration with business systems. Whether you need rapid prototyping or a fully customized implementation, there's a tool that fits your timeline and project scope.

Conclusion

When selecting a voice assistant tool, it's essential to weigh your project needs, technical skills, and budget. The tools discussed above each bring unique strengths, making it easier to align their features with your specific goals.

Google Dialogflow stands out for its natural language understanding and relatively gentle learning curve, and no-code platforms like Synthflow lower the barrier further. These are great options for teams looking to deliver results quickly. On the other hand, tools like Amazon Lex are designed to integrate closely with AWS, making them ideal for projects already built on Amazon's cloud ecosystem.

For those seeking extensive customization, open-source tools such as Rasa, OpenAI Whisper, and Mozilla DeepSpeech (now archived and no longer maintained) provide the most flexibility. However, they come with added technical demands and infrastructure costs, making them better suited for organizations with dedicated AI teams or strict privacy and compliance requirements.

Budget considerations also play a major role. Pricing models vary widely - some platforms offer pay-as-you-go options, others require subscriptions, and some shift costs toward infrastructure rather than licensing. Understanding these structures can help you make a cost-effective decision.

Integration is another key factor. Tools like Dialogflow, Azure Bot Service, and Rasa excel in connecting with popular business systems, ensuring smooth workflows and operational efficiency.

Most of these tools are optimized for American English, effectively handling regional accents and nuances, which is particularly important for US-based users.

Ultimately, choosing the right tool depends on balancing your immediate project needs with long-term plans. Pre-built solutions work well for simpler conversational interfaces, while more complex projects requiring custom training data benefit from flexible frameworks. By clearly identifying your priorities - whether it's budget, timeline, customization, or integration - you can select the tool that best supports your voice assistant development goals.

FAQs

What should I look for in a voice assistant development tool for my business in 2025?

When selecting a voice assistant development tool in 2025, it's important to focus on scalability, customization, and integration capabilities. The right tool should work smoothly across different platforms, support advanced AI features like contextual understanding, and let you create experiences tailored specifically to your users' needs.

It's also crucial to choose tools that prioritize privacy and security to safeguard user data. Look for options that integrate seamlessly with your current systems and align with U.S. localization standards - such as language preferences, imperial measurements, and cultural norms - to ensure the experience feels intuitive and user-friendly.

What are the key differences between Rasa and OpenAI Whisper in terms of customization and technical requirements?

Rasa provides a high level of flexibility, making it a strong option for building voice assistants tailored to very specific domains. That said, it does come with a steep learning curve. Setting it up and managing it involves technical tasks like configuring intent recognition and overseeing natural language processing (NLP) components.

On the flip side, OpenAI Whisper focuses on simplicity and ease of use, delivering impressive performance right out of the box along with support for multiple languages. While it’s easier to get started with, customizing it for specialized use cases still requires advanced knowledge, such as training the model using custom datasets.

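Keep in mind that the two aren't direct substitutes: Whisper converts speech to text, while Rasa decides how the assistant responds to that text, so a voice assistant can use a speech-to-text engine like Whisper in front of a dialogue framework like Rasa. Ultimately, the choice depends on the complexity of your project and the technical expertise of your team.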

What advantages do no-code platforms like Synthflow offer small businesses for creating voice assistants?

No-code platforms like Synthflow make it possible for small businesses to build voice assistants quickly and at a lower cost - no advanced technical skills required. These tools streamline the development process, making it easier to launch, update, and customize voice assistants as needed.

By eliminating the need for specialized developers, no-code platforms open the door for non-technical team members to work with AI technology. This not only speeds up progress but also helps cut down on operational expenses. For small businesses, this kind of flexibility allows them to respond quickly to customer demands and remain competitive in an ever-evolving market.